November 19, 2019

While we wait for Oculus to release the Finger Tracking SDK for the Quest, I’m playing around with HTC Vive’s implementation of hand and finger tracking to get a feel for the new input method and what advantages it unlocks!

Watch this space: more videos are coming.

Connect with iTRA to discuss your next project.

August 16, 2019

iTRA are developing a new App named “Tager”, which lets the user tag items electronically (hence the name). Development is currently focused on supporting and enhancing the electronic Permit to Work (PTW) system, and on a potential replacement for QR codes.

The App can be used on any (intrinsically safe) mobile device, with Tag entries directly visible to the process controller / Permit Authority.

The App works on item and environment recognition. Once the designated item is identified, the user applies a Tag with a tap of the screen, and that Tag is transferred directly to a database or control system.

Whilst the existing Permit to Work system remains essentially unchanged, Tager would add an additional layer of control. A Permit Authority, using a device, applies the Tag to the process, plant or equipment, linked to relevant supporting documentation. This Tag can only be applied and removed at the PTW site, not remotely.

The electronic Tag interfaces with the existing PTW system but also locks out the process, plant or equipment. The process, plant or equipment cannot be re-energised until the electronic Tag is removed by the Permit Authority.
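The lockout rule described above behaves like a small state machine: a Tag can only be applied or removed on site, and re-energising is refused while a Tag is in place. The Python sketch below is purely illustrative and is not Tager's actual implementation. The `Equipment` and `TagError` names, the `at_site` flag (standing in for whatever on-site check the App performs), and the rule that only the Permit Authority holding the Tag may remove it are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


class TagError(Exception):
    """Raised when an action would break a (hypothetical) tagging rule."""


@dataclass
class Equipment:
    """Hypothetical model of plant or equipment under a Permit to Work."""

    name: str
    energised: bool = False           # assume the equipment starts isolated
    tag_holder: Optional[str] = None  # Permit Authority holding the Tag, if any

    @property
    def locked_out(self) -> bool:
        # Any electronic Tag in place locks the equipment out.
        return self.tag_holder is not None

    def apply_tag(self, authority: str, at_site: bool) -> None:
        # at_site stands in for the App's on-site check (assumption).
        if not at_site:
            raise TagError("Tags can only be applied at the PTW site")
        if self.locked_out:
            raise TagError(f"{self.name} is already tagged by {self.tag_holder}")
        self.tag_holder = authority

    def remove_tag(self, authority: str, at_site: bool) -> None:
        if not at_site:
            raise TagError("Tags can only be removed at the PTW site")
        # Assumption: only the Permit Authority holding the Tag may remove it.
        if self.tag_holder != authority:
            raise TagError("Only the Permit Authority holding the Tag can remove it")
        self.tag_holder = None

    def energise(self) -> None:
        # Re-energising is refused while a Tag is in place.
        if self.locked_out:
            raise TagError(f"{self.name} is locked out by an electronic Tag")
        self.energised = True


if __name__ == "__main__":
    pump = Equipment("Pump P-101")
    pump.apply_tag("Permit Authority A", at_site=True)
    try:
        pump.energise()
    except TagError as err:
        print("Blocked:", err)
    pump.remove_tag("Permit Authority A", at_site=True)
    pump.energise()
    print("Energised:", pump.energised)
```

Keeping the lockout as a derived `locked_out` property, rather than a separate flag, means the equipment can never be "unlocked" while a Tag still exists, which mirrors the rule that only removing the Tag releases the lockout.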

Tager may also be used to enhance or replace the more traditional QR code. It would interface electronically with existing processes, not only providing direct visual access to the information embedded in a QR code but also overcoming a significant QR code weakness: the code's limited longevity in harsh environments.

Early days, but the results are promising.

Connect with iTRA to discuss your next project.

June 25, 2019

Not surprisingly, nothing went as smoothly as I had hoped. Once I got into Unity and began converting our current PC VR projects to Android, I was met with a mix of Unity bugs, incorrect settings and crashes for reasons I have yet to figure out.

I only had a very short window to get something up and running on the Oculus Quest before I had to move on to other projects. I was looking for a quick turnaround, so I attempted to convert our most basic package to the new platform. After waiting what felt like hours for Unity to switch over to the Android platform, I removed all the old cameras from the scene and installed the latest Oculus SDK. I published it to the Quest and, to no one’s surprise, it crashed! Instead of spending too long debugging this, I decided to start fresh with a new Unity project, import the models and publish again.

While I was creating the new project, I noticed something new: Unity has a new render pipeline designed specifically for low-powered devices. I did a little research into it and got really excited; they were promising performance gains of up to 100%. Knowing how unoptimized the current render pipeline is, I believed them. But of course, nothing is that easy. There seems to be a bug in the package when exporting to Android. Right at the end of the build process, an error would pop up: “Return of the style/VrActivityTheme not found in AndroidManifest.xml”. Great! I did a bit of research and came across this forum thread. The general consensus seems to be that it will be patched in a future release of Unity. As seems to be the theme of this post, I didn’t have time to look further into the issue, and since it isn’t critical to what I want to achieve, I’ll put the new pipeline on the back burner. I will definitely keep an eye on it, though, as I’m not one to ignore a free performance increase!

Back to just trying to get anything to run on the Quest. I created a new project with just a cube and the Oculus SDK. I published it to the Quest and, a little surprisingly this time, it still crashed instantly. I had expected the default Unity configuration combined with the Oculus SDK to be enough to get it going; I guess I was mistaken. Back to Google, and it looks like I’m not the only one having these issues. I found the answer in this thread. It turns out Unity defaults to an incompatible graphics API. I removed Vulkan from the Graphics APIs list in the Android Player Settings and it worked! Now all I needed to do was import my model into the scene and publish to the Quest. You can see the fruits of my labour below. It performed excellently! I’m very impressed.

Just to give you some context for how amazing this is, this is a to-scale 3D model of my house. The reason I am so blown away by the demo is that I was actually walking around my house when I recorded this video. The Oculus Quest tracking did not drift even slightly. I managed to walk through every room in my house while wearing the headset without bumping into any walls! And no, I didn’t put any time into lighting the scene; I just used what I had set up in Cinema 4D. Although the scene is a little blown out, I am surprised how great it looks and how well it ran with these unoptimized models.

Connect with iTRA to discuss your next project.

June 19, 2019

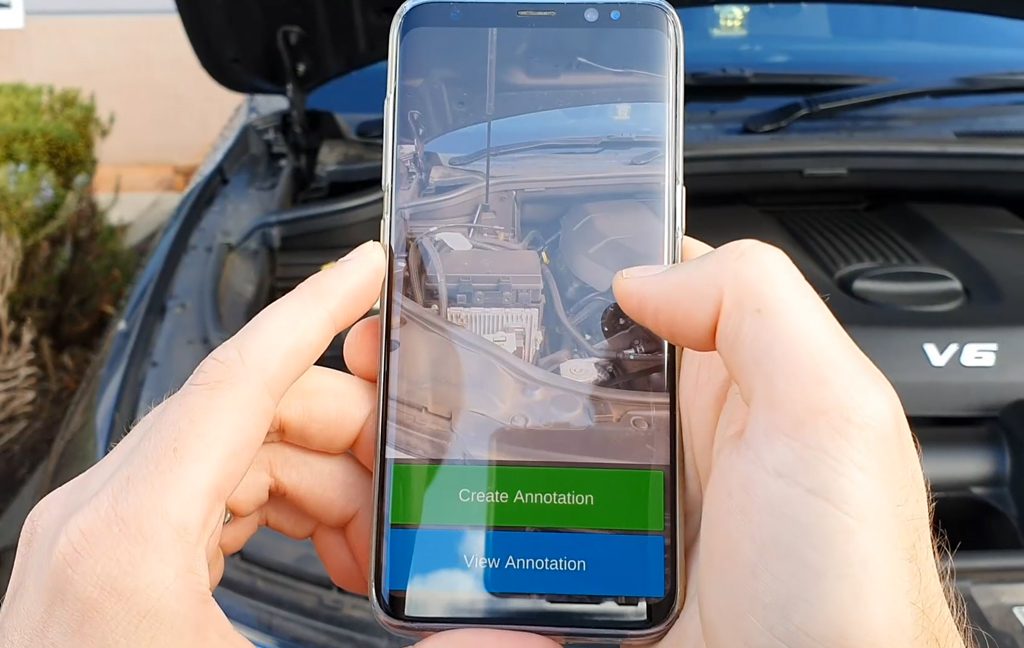

Place persistent AR markers in the real environment and share them across devices for collaboration. No ugly QR or AR stickers needed. The app recognises your environment and loads previously created tags from the cloud.

Applications built with this technology connect the physical world with digital assets and will change how we live and work. The possibilities are endless: tags can also show real-time data from SCADA or IoT systems.

Watch this space: more videos are coming.

Connect with iTRA to discuss your next project.

June 4, 2019

Since Oculus announced the Quest’s shipping date in April, I have been eagerly awaiting the arrival of our headset. Its inside-out approach to tracking is intriguing, and with the prospect of no more umbilical cord attached to my head, there is a lot to be excited about.

Everyone who has tried Virtual Reality with us has been really excited by what the technology is capable of, not only now, but what it has the potential to become. A huge drawback thus far has been the cost of the device, coupled with the need for a beefy gaming computer to run the software. This is why the Quest has everyone, including me, so excited: without the need to do the computing externally, the barrier to entry is dramatically reduced.

The plan is to port all of our existing applications to the Quest, without giving up too much of the quality and complexity in our scenes. Given that we are essentially porting to a mobile processor, this is going to be a big challenge.

I have been working with the Oculus Rift and HTC Vive for the past 3 years using the Unity Engine. It has been great having the performance of a desktop computer powering the headset, but sometimes it is still a struggle to get certain experiences to run smoothly on these devices. I am going to need to double down on optimization of both the models and the code if I want to get these applications running smoothly on the Quest.

Before I begin this daunting task, I want to play around with a third-party application, Riftcat’s VRidge, which I’ve been following ever since I got my first Google Cardboard. The software renders the game on your desktop computer and streams it over Wi-Fi to another device, be it a phone in a Google Cardboard or a Gear VR. This is a great solution for running high-performance experiences on cheap, portable headsets.

After playing around with Riftcat, I am quite impressed. They managed to include full SteamVR compatibility, including its room-scale guardian system with 6DOF controllers. This can’t have been an easy accomplishment. Unfortunately, it is clearly still a beta. The tracking was flawless (apart from a little latency, which I can forgive), but the quality of the visual stream was a bit lackluster. It suffered from numerous dropped frames and visible compression artifacts whenever the Wi-Fi signal degraded even a little. I am going to keep an eye on the project in the hope that they overcome these shortcomings, but for now, I can’t recommend it to clients as a solution.

It looks like I’d better open Unity and get porting!

Connect with iTRA to discuss your next project.

April 2, 2019

Building upon the success of the VR Prestart Light Vehicle Check, which rolled out late last year, we have been working with one of our clients to enhance their Fire Extinguisher training by creating 3 Virtual Reality scenarios for their employees to experience.

While giving the trainees (virtual) hands-on time with the extinguishers to better prepare them for an emergency, our client also gains insight into their employees’ knowledge of which extinguisher to use and how effectively they put out a fire. This data is collected throughout the 3 scenarios and sent back to TrainTrac for analysis. As an added bonus, the data is also presented to the trainee as a score, which is added to a site-wide leaderboard. This encourages competition between employees and gives them an incentive to do the training again to beat their co-workers’ scores.

During the prototype stage of the project, we built a similar experience for the HoloLens, where a pallet fire would be placed in the real world using Augmented Reality and a trainee would use a virtual fire extinguisher to put it out. The benefit of this is that you can practise each scenario in the actual location where a real-world fire would occur. However, this approach adds complexity and lacks the immersion that Virtual Reality provides. Ultimately, our client chose the Virtual Reality approach.

You can see demos of our other Virtual Reality showcases on our Virtual Reality page.

Connect with iTRA to discuss your next project.

ABN: 67 119 274 181